AVOID:

UNEVEN OR NON-UNIFORM LIGHTING CONDITIONS

SHADOWS AND REFLECTIONS

CHANGING LIGHT CONDITIONS

Lighting your subject

When capturing our object or scene, lighting conditions are critical to get right because, depending on the 3D capture device, tool and methodology used, the lighting on the subject may not be editable after capture.

Ideally, we aim for even lighting whenever we are 3D scanning, avoiding low light, hotspots, and reflected or refracted light that may change over time or as we move around the subject. We are after a consistent, evenly diffused light source with minimal shadows. We also have to be careful about which lighting details may be baked into our model's textures and what else is within the scene, so we may need to place lights far enough from the subject that they are not themselves captured during the scan.
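To spot hotspots, deep shadows and uneven light before committing to a full capture, we could check a test frame numerically. The sketch below is a minimal illustration, not part of any particular capture tool: it assumes the frame has already been loaded as a grayscale grid of values from 0 to 255 (a list of rows), and the `lighting_report` name, the clipping thresholds and the 4×4 grid are hypothetical choices.

```python
from statistics import mean

def lighting_report(gray, grid=4, hi=250, lo=5):
    """Rough exposure summary for a grayscale frame given as a list of rows of 0-255 values.

    hi/lo are illustrative (hypothetical) clipping thresholds; grid sets how many
    cells per side are compared when judging evenness.
    """
    h, w = len(gray), len(gray[0])
    pixels = [p for row in gray for p in row]
    n = len(pixels)
    clipped = sum(p >= hi for p in pixels) / n  # share of blown-out highlight pixels
    crushed = sum(p <= lo for p in pixels) / n  # share of near-black shadow pixels
    cell_means = []
    for gy in range(grid):
        for gx in range(grid):
            rows = range(gy * h // grid, (gy + 1) * h // grid)
            cols = range(gx * w // grid, (gx + 1) * w // grid)
            cell = [gray[y][x] for y in rows for x in cols]
            if cell:  # very small frames can leave some cells empty
                cell_means.append(mean(cell))
    brightest = max(cell_means)
    # 1.0 means every cell is equally bright; values near 0 mean some areas are far darker.
    evenness = min(cell_means) / brightest if brightest else 0.0
    return {"clipped": clipped, "crushed": crushed, "evenness": evenness}

# Hypothetical test frame: left half in deep shadow, right half blown out.
frame = [[0] * 4 + [255] * 4 for _ in range(8)]
print(lighting_report(frame))
```

A high `clipped` or `crushed` share points to hotspots or dark shadows, and an `evenness` well below 1.0 means some regions of the frame are much darker than others.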

Where possible, it can be an advantage to keep the lighting static (or use natural light) and rotate the subject rather than moving around it, as this reduces the chance of creating shadows. For larger subjects and scenes, however, this may not be possible.

Space/Distance

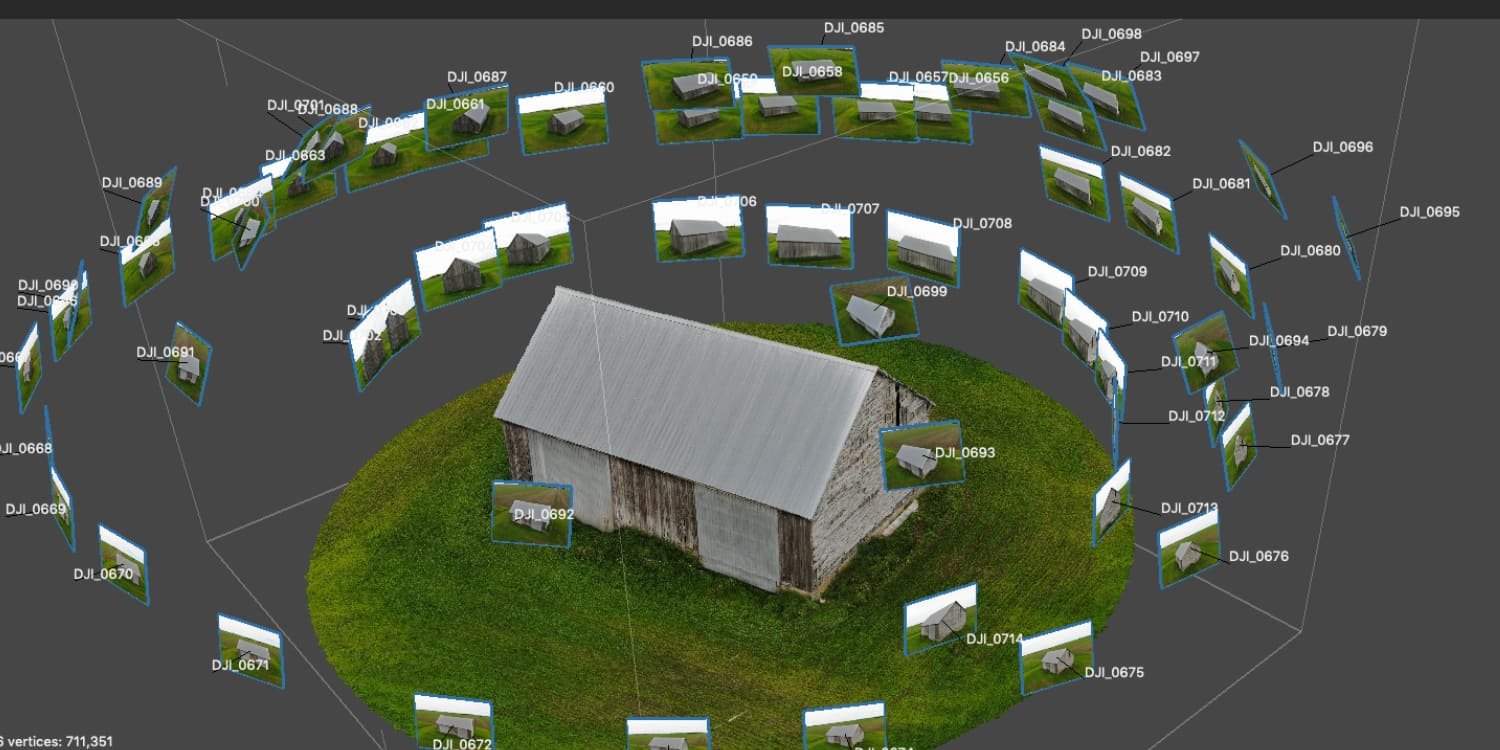

We need to think about our distance from the subject when capturing, as we must be able to move around the space to capture every angle for a complete 3D capture. We will orbit the subject at various heights and distances to capture both the overall shape and close-up details. It is not unusual for a 3D capture to use upwards of 200 reference images (photogrammetry, NeRF).
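How far away we stand determines how much detail each pixel records. A standard way to estimate this for a pinhole camera is the ground sampling distance (GSD), the size on the subject covered by one pixel: GSD = distance × (sensor width / image width in pixels) / focal length. The sketch below assumes the surface faces the camera squarely; the function names and the example camera (24 mm lens, 36 mm wide sensor, 6000 px wide images) are hypothetical.

```python
def ground_sampling_distance_mm(distance_m, focal_mm, sensor_width_mm, image_width_px):
    """Approximate size on the subject, in mm, covered by one pixel (pinhole model)."""
    pixel_pitch_mm = sensor_width_mm / image_width_px  # physical width of one pixel
    return distance_m * 1000 * pixel_pitch_mm / focal_mm

def max_distance_m(target_gsd_mm, focal_mm, sensor_width_mm, image_width_px):
    """Furthest distance, in metres, at which one pixel covers at most target_gsd_mm."""
    pixel_pitch_mm = sensor_width_mm / image_width_px
    return target_gsd_mm * focal_mm / pixel_pitch_mm / 1000

# Hypothetical example camera: 24 mm lens, 36 mm sensor width, 6000 px wide images.
print(ground_sampling_distance_mm(2.0, 24, 36, 6000))  # roughly 0.5 mm per pixel
print(max_distance_m(0.25, 24, 36, 6000))              # roughly 1.0 m
```

With these example values, shooting from 2 m records roughly 0.5 mm per pixel, so to resolve finer details we would move closer or use a longer lens for the close-up passes.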

We want consecutive images to overlap so that trackable points from previous frames remain in view. This keeps the computations as accurate as possible, because the software relies on those shared points to work out the relative positions of the cameras and the subject in 3D space.
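Planning the orbits in advance makes it easier to keep neighbouring images overlapping. The sketch below places camera positions evenly on horizontal rings around a subject at the origin; the smaller the angle between consecutive shots, the more each image shares with its neighbours. The function name, ring radius, heights and the 10° step are illustrative assumptions, not values from any particular tool.

```python
import math

def orbit_plan(radius_m, heights_m, max_step_deg):
    """Camera positions (x, y, z) on rings around a subject at the origin.

    Shots are spread evenly so consecutive positions are never more than
    max_step_deg apart; a smaller step means more overlap between neighbours.
    """
    shots_per_ring = math.ceil(360 / max_step_deg)
    step_rad = 2 * math.pi / shots_per_ring
    positions = []
    for z in heights_m:
        for i in range(shots_per_ring):
            a = i * step_rad
            positions.append((radius_m * math.cos(a), radius_m * math.sin(a), z))
    return positions

# Hypothetical plan: three rings at a 1.5 m radius, one shot at least every 10 degrees.
plan = orbit_plan(1.5, [0.3, 1.0, 1.7], 10)
print(len(plan))  # 108 images before any close-up detail shots
```

Three rings alone already give 108 images in this example, which shows how quickly a capture reaches a couple of hundred references once close-ups are added.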

Motion

When capturing our object or environment, we should try to fit as much of the subject as possible within the frame while avoiding fast movements and blurred images. We tend to orbit the subject at various heights and distances, then capture any close-up details or gaps that could otherwise form in the model.
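To see why fast movement blurs images, we can estimate the length of the blur streak. For a pinhole camera moving sideways, blur in pixels ≈ speed × exposure time × focal length in pixels / distance to the subject. This is a simplified sketch that ignores camera rotation (handheld shake), which is often the bigger source of blur; the function names and example numbers are hypothetical.

```python
def focal_length_px(focal_mm, sensor_width_mm, image_width_px):
    """Convert a lens focal length to pixels for a given sensor and image width."""
    return focal_mm * image_width_px / sensor_width_mm

def motion_blur_px(speed_m_s, exposure_s, distance_m, f_px):
    """Approximate blur streak, in pixels, for sideways camera movement."""
    return speed_m_s * exposure_s * f_px / distance_m

def max_exposure_s(max_blur_px, speed_m_s, distance_m, f_px):
    """Longest exposure that keeps the estimated blur within max_blur_px."""
    return max_blur_px * distance_m / (speed_m_s * f_px)

f_px = focal_length_px(24, 36, 6000)            # 4000 px for this hypothetical camera
print(motion_blur_px(0.5, 1 / 60, 2.0, f_px))   # about 17 px of blur at walking pace
print(max_exposure_s(1.0, 0.5, 2.0, f_px))      # about 0.001 s, i.e. 1/1000 s
```

Moving more slowly, standing further back or using a faster shutter speed all shrink the streak, which is why we avoid fast movements while orbiting.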

We also need to ensure the subject or scene does not move or change between captured frames, as this could cause the 3D calibration and stitching process to fail or to generate distorted, abstract shapes and spurious data. The more images we capture, whether from video or stills, the more accurate the generation can become, but the longer it will take to process.
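When capturing from video, we rarely need every frame. One simple way to balance accuracy against processing time is to extract a fixed number of evenly spaced frames. The sketch below only computes which frame numbers to keep; decoding the video is left to whichever tool we use, and the function name and example values are hypothetical.

```python
def frame_indices(fps, duration_s, target_images):
    """Evenly spaced frame numbers to extract from a clip, at most target_images of them."""
    total = int(fps * duration_s)
    if target_images <= 0 or total <= 0:
        return []
    if target_images >= total:
        return list(range(total))  # clip is short: keep every frame
    step = total / target_images
    return [int(i * step) for i in range(target_images)]

# A hypothetical 60 s clip at 30 fps (1800 frames) reduced to 200 stills.
picked = frame_indices(30, 60, 200)
print(picked[:3], len(picked))  # [0, 9, 18] 200
```

Sampling too sparsely from a fast-moving clip can still leave gaps, so the kept frames should continue to overlap in the way described above.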

Textures

We have to consider the texture and surface of the subject we are capturing. We want to avoid reflective, transparent, or highly repetitive textures and surfaces, as 3D capture processing struggles with them.

If we need to capture reflective or transparent surfaces, we must give them texture: for clear surfaces, for example, we can apply a textured coating so the photogrammetry software has reference points to track. These surfaces are difficult because what the camera sees changes with the angle of capture (reflection on shiny surfaces, refraction through transparent ones), and repeating patterns can be misinterpreted, placing points in the wrong position in 3D space.

Think Optimally

We are always trying to optimise our data and model generation: the larger the generated files, the more polygons or point-cloud data need to be computed and processed, and the more demanding the process becomes on the system.
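One common way to cut the amount of point-cloud data before further processing is voxel-grid downsampling: space is divided into small cubes and all points inside a cube are replaced by their average. This technique is our suggestion rather than something prescribed above, and the sketch below is a minimal pure-Python version with a hypothetical `voxel_downsample` name; larger voxels give smaller files at the cost of fine detail.

```python
import math
from collections import defaultdict

def voxel_downsample(points, voxel_size):
    """Replace all (x, y, z) points sharing a cubic voxel with their centroid."""
    buckets = defaultdict(list)
    for x, y, z in points:
        # Integer voxel coordinates; floor keeps negative coordinates in the right cell.
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        buckets[key].append((x, y, z))
    # zip(*pts) groups the x, y and z values of a voxel so each axis can be averaged.
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts)) for pts in buckets.values()]

cloud = [(0.01, 0.01, 0.01), (0.02, 0.02, 0.02), (0.5, 0.5, 0.5)]
print(len(voxel_downsample(cloud, 0.1)))  # 2
```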

Resolution and frame rate are also important. We want to use as high-quality a camera as possible to increase accuracy, as low-resolution images and poorly lit subjects are harder to capture and process and result in poor outputs. All photo or video references SHOULD come from one device; do not switch lenses or settings between takes, as this can cause generation to fail.
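Before processing, it can be worth confirming that every reference really did come from the same device and settings. The sketch below assumes we have already read each image's metadata (for example its EXIF data) into a dictionary; the key names are hypothetical and would need to match however that metadata was extracted.

```python
def inconsistent_settings(metadata, keys=("camera_model", "lens_model", "focal_length_mm",
                                          "image_size", "f_number", "exposure_s", "iso")):
    """Return {key: set of values seen} for every key whose value differs between images.

    Values must be hashable (use tuples, not lists, for sizes).
    """
    problems = {}
    for key in keys:
        seen = {m.get(key) for m in metadata}  # a missing key counts as the value None
        if len(seen) > 1:
            problems[key] = seen
    return problems

# Hypothetical metadata for two shots where the focal length was changed mid-capture.
shots = [
    {"camera_model": "CamA", "lens_model": "24mm", "focal_length_mm": 24, "image_size": (6000, 4000)},
    {"camera_model": "CamA", "lens_model": "24mm", "focal_length_mm": 35, "image_size": (6000, 4000)},
]
print(inconsistent_settings(shots))  # reports that focal_length_mm differs
```

An empty result means the checked settings match across all images; anything else flags shots to retake or drop before generation.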

If capturing a person, think about the pose you are capturing and whether the subject can hold that pose for the duration of the capture, allowing for blinking, breathing and micro-movements. I would personally capture the front of the subject’s face first, as quickly as possible, and make sure the subject knows they can blink whenever the camera is not pointing at their face.